Read this article when you want to start exploring available data sources, types, structures, and models. Exploring the data landscape is often an underestimated task. This is mainly because companies lack a good understanding of their data structure.

In our current age of digitization, data is growing at an astonishing rate, and AI is predicted to have a dramatic impact on our society and workplaces. Well, AI is already impacting a range of real-world scenarios. But these scenarios are relatively narrow, limiting the impact of AI to a selected set of use cases.

When we scale up and start to use AI in more comprehensive applications, AI implementations often fail. For example, you may start to use AI to support your everyday decisions and assess complex data.

This is not a novel scenario, it’s very similar to the issues other IT projects face like ERP and CRM implementations, for example. When you start to scale up the size and complexity of the problem you’re dealing with, your problems also grow exponentially.

Data is key to resolving these issues. Yet, the term “data is the new corporate currency” is thrown around regularly nowadays. So, how are you going to capitalize on this data? The obvious answer is to “mine” your data.

However, we would say you need to “mind” your data to get the best data models (and results) possible.

In fact, the data model is the one element consistently underestimated by today’s enterprises. If you ever want to truly benefit from AI in your organization, you must understand the data models you use.

This issue crops up time and time again. When embarking on any data initiative, you must understand the data, and ask your information experts the following question: Do we have a data model for our key systems?

The definition of a data model, according to Wikipedia, is:

“A data model (or datamodel) is an abstract model that organizes elements of data and standardizes how they relate to one another and to properties of the real-world entities.”

OK, in defense of every IT professional out there, creating a data model is a challenging task. The size and complexity of your systems, both on and off the cloud, are all conspiring against you.

For any type of analytics project, basic or advanced, understanding your data structures is just the starting point. To create accurate decision support processes and systems, you need a deep understanding of these data structures and how your business processes are reflected in the data.

But data is now a big and difficult beast to tame. The activities of any Data Scientist are split roughly into 90% data and 10% science tasks. Extracting, cleaning, combining, and transforming data is the most resource-intensive challenge in many AI (and BI) initiatives.

This is one of the big paradoxes of data science. On the one hand, we evangelize the gospel of AI and, on the other hand, we seem to forget the fundamentals of good data management.

For any kind of advisor or consultant, your data is the starting point – that is whether you are handing out a simple piece of advice or planning a major digital transformation.

So, when embarking on any data science initiative, the first question to ask is: “Can you show me the data model?”

8 CONSIDERATIONS WHEN EXPLORING DATA SOURCES

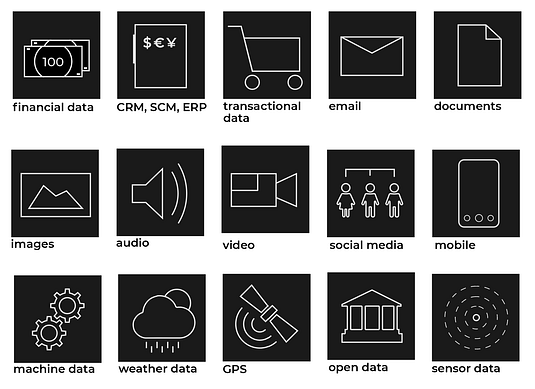

Data is everywhere. Sometimes, it is structured and placed in ERP and CRM systems. But a lot of data is unstructured. This includes data from sensors, audio, video, or pictures, for example. Unstructured data must be converted into structured data so it can be used in your AI and machine learning models.

These are elements to consider when you start exploring the data landscape:

QUALITY Data quality is obviously an important parameter to decide which sources are usable. It is more difficult to determine the data quality when the source is external and not used. A good starting point is to gain an understanding of how the data has been gathered. You can also request a small sample of the data to assess its quality. Data quality is easier to determine when the source has been used. A new assessment of the quality might sometimes then still be required. This is because the data quality is not one-dimensional: the quality might be sufficient for one purpose, but insufficient for another.

BIAS Bias is a special case of data quality. Here, the data may not represent the target population. Models that are based on biased data lead to inaccuracies.

LINKING MULTIPLE SOURCES When different sources are combined, they must contain a common key or way to derive this key.

COSTS Costs are incurred for both internal and external data sources. For external sources, there is the cost of purchasing the data, but there are also other (indirect) costs. These include the cost of extracting and preparing the data, for example, and the long-term costs of maintaining this data.

STRUCTURED VS. UNSTRUCTURED To convert the unstructured data into structured data, advanced techniques are required, such as NLP. Such processes take time and effort, incurring extra costs and additional expertise.

DATA PRIVACY Data sources must be evaluated for personal data and other sensitive information. This requires additional work (e.g. pseudonymization and anonymization) and incurs extra costs.

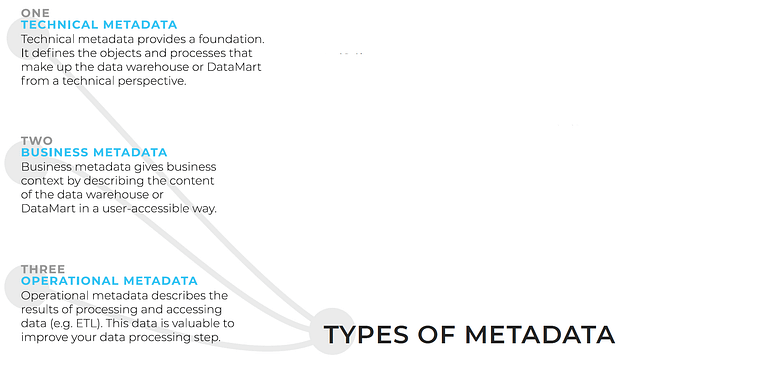

METADATA Metadata is often described as “data about data”. Metadata can refer to the description of technical data such as tables, fields, and data types. It can also be a description of the content to help you understand it or find the data source.

REAL-TIME VS. HISTORICAL The frequency of your data points, as well as the amount of historical data, are key to consider when you are investigating data sources. Sometimes real-time data is essential, often it is enough to have a good quality range of historical data points.

3 REASONS TO USE EXTERNAL DATA

With many data science projects, internal data will suffice. In some cases, it is necessary to acquire external data. This is because:

ONE The quality of the internal data is insufficient and must be corrected.

TWO You need to enrich internal data with information, which is not available in the internal data sources. For example, the internal data is enriched with external data where the original external data can be structured or unstructured.

THREE The organization requires information about non-customers or the wider competitive landscape. This information can facilitate targeted sales or marketing efforts or perform detailed market analysis.

It is important that a technical description of the data is provided. This may include information like the file type and, for each field, a description, data type, and length of the field. This “data about data” is called metadata. It is essential for external data and internal data. Ralph Kimball defined metadata in his book as “all the information that defines and describes the structures, operations, and contents of the Data Warehouse system “.

5 DIMENSIONS OF BIAS

“People generally see what they look for, and hear what they listen for.” – To Kill a Mockingbird, Harper Lee

AI and Data Science adoption rates are soaring as more organizations pursue a data-driven agenda. But have you stopped to consider the ethics of AI? It is a complex undertaking, with many businesses struggling to apply ethical considerations in their day-to-day work.

‘Bias’ is a term that often gets thrown around, stalling data-driven initiatives, complicating project implementations and confusing stakeholders.

Bias is an important consideration when reviewing potential data sources. Bias is best described as “when data is not representative of the population of interest”. Bias can be introduced when the data is gathered. For example, the data may not have been randomly collected and certain groups will be over- or under-represented, compared to the population of interest. This is selection bias.

In the case of surviving bias, one group (the failures) is not taken into account at all. Models using biased data result in inaccurate predictions.

Wikipedia defines bias as:

“Bias is a disproportionate weight in favor of or against an idea or thing, usually in a way that is closed-minded, prejudicial, or unfair. Biases can be innate or learned. People may develop biases for or against an individual, a group, or a belief.[1] In science and engineering, a bias is a systematic error. Statistical bias results f rom an unfair sampling of a population, or f rom an estimation process that does not give accurate results on average.”

To estimate the extent to which a data source is biased, it is important to understand how the data has been collected. Bias can also be caused by prejudice or human bias. This type of bias is more difficult to detect.

So, how can your organization achieve the right balance between the ethics of AI and achieving your business objectives? In this section, we’ll focus on a few important elements of bias, explaining how your business can embrace AI and Data Science in an ethical manner for digital success.

Let’s unpack the basics of that statement and explain why they are important from a Data Science context.

ONE – DATA CONDITIONS

Good quality data is not important for good AI, right? Wrong. Ask any experienced data scientist and they’ll tell you the same thing: to make accurate (and therefore ethical) decisions based on your data, the quality of your data is essential.

Another misconception is that data is objective. Bias within data, however, can lead to incorrect conclusions or reinforce existing prejudices within your data. As such, the state of your data and your data management efforts are incredibly important. Data privacy and data security are, therefore, vital boundary conditions for ethical data usage.

From another perspective: you may think all databases are biased since, by their very nature, they are a selection of datasets (and cannot include everything). However, it is more important to understand the basics of your data sample, including how your selection of data (i.e. your database), and/or its sub-selections relate to one another.

TWO – MODEL CONDITIONS

You must take data bias and quality into account at the modeling stage. Bias can show up in the data and it can also be introduced when you select attributes for an AI model.

The transparency of your model matters. You must have justifiable reasons to opt for a more powerful but less transparent model. The good news is that transparency is not impossible to achieve. You can increase the transparency of, for example, a complicated neural network model by analyzing its operation or function, or by introducing human supervision.

Either way, an AI model must be auditable to ensure the output of the model or to ensure the steps leading to the model are replicable. To achieve this, an external company or your internal teams can conduct an audit.

THREE – DATA SCIENTIST CONDITIONS

Whatever project you are working on, it is unethical to act against your existing policies, rules, or regulations.

This tenet also applies to data science. But you must have a clear accountability agreement in place to provide a consistent approach to ethics across your team. Your data scientists must also work in a proportional and transparent manner, adopting the least intrusive data strategies and clearly documenting your policies, rules, and regulations.

FOUR – IMPACT ON STAKEHOLDERS

Your AI and Data Science project has a people impact on both your employees and the data owners.

You should allow employees to provide feedback across the project lifecycle, including after deployment. You should also allow data owners to report any suspected issues. You may also need to make special considerations around the impact of your data project on vulnerable groups.

Accessibility is another consideration where people should have access to your AI products and services. This will safeguard certain groups within society, ensuring they are not discriminated against when your AI-based technologies are used in the wider world.

FIVE – IMPACT ON COMMUNITY

From a social, environmental, and democratic perspective, data projects must have a positive impact on our community. Cambridge Analytica’s use of Facebook data during the 2016 US election is a clear example here of what not to do.

You should also apply one final consideration: the headline check. If you cannot easily justify your data project in one simple sentence, you may want to leave it on the drawing board.

Bias is a station on many different journeys.